Alternative dataset for Code Understanding and Generation

TL;DR: The Vault is a multilingual code-text dataset with over 40 million pairs covering 10 popular programming languages. It is the largest corpus containing parallel code-text data. By building upon The Stack, a massive collection of raw code samples, The Vault offers a comprehensive and high-quality resource for advancing research in code understanding and generation. The dataset also comes with an accessible toolkit that supports the community and encourages customization and improvement.

Demand for a large-scale parallel resource

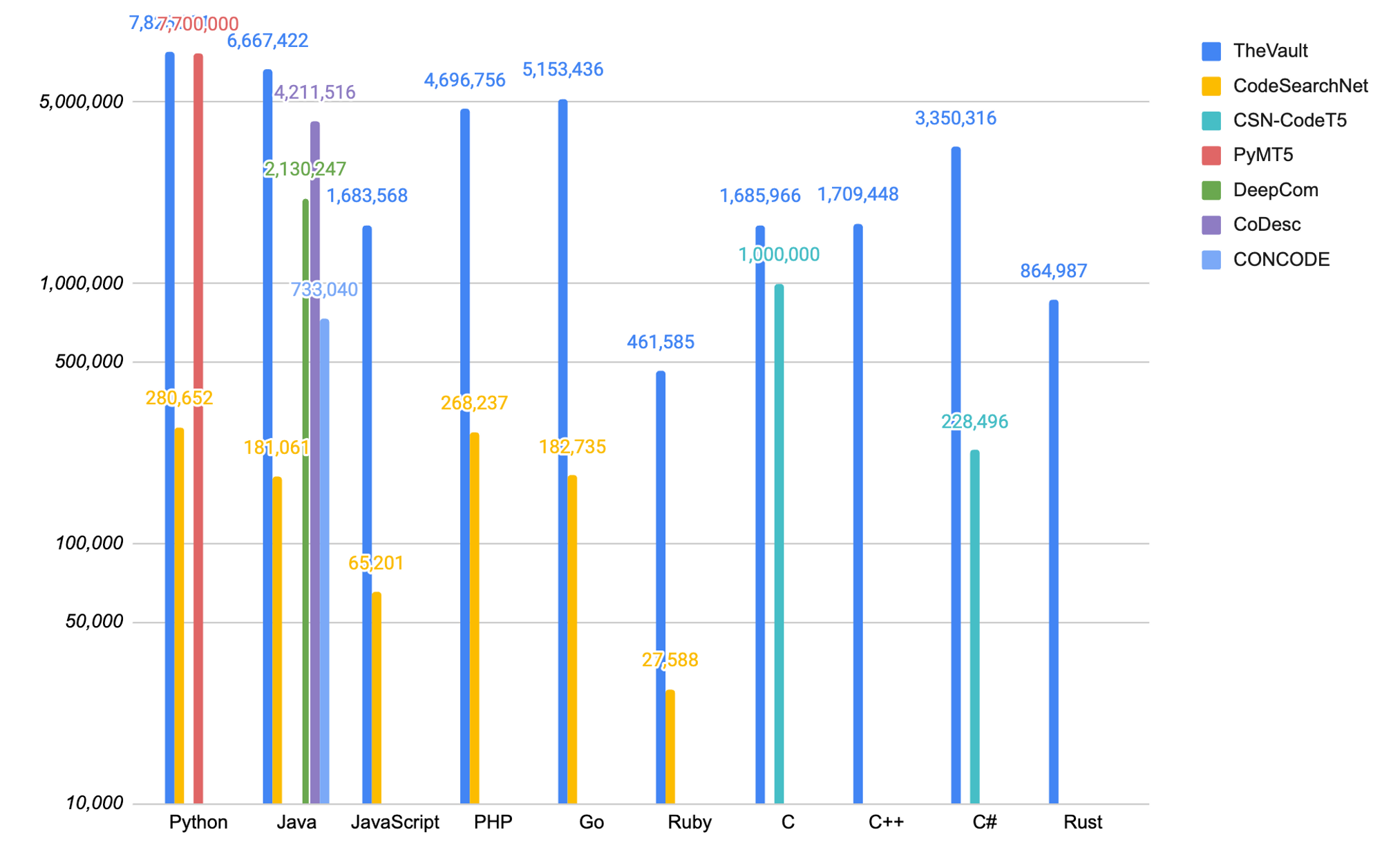

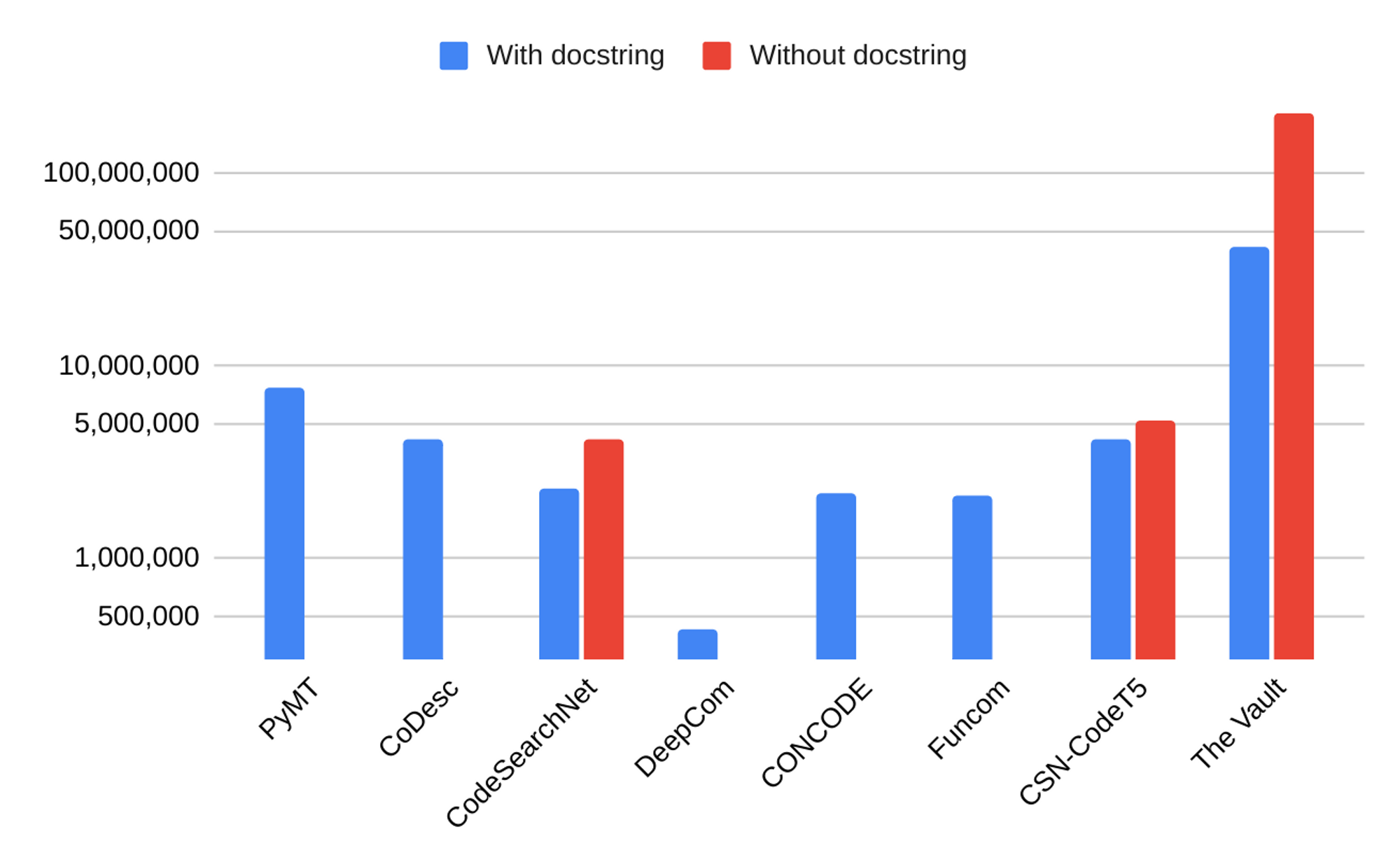

The urgency for a massive parallel dataset in the domain of AI for code (AI4Code) stems from the increasing need to improve the performance of large language models (LLMs) for code generation and understanding. Despite the proliferation of open-source code repositories, we found that existing code-text pair datasets, such as CONCODE, FunCom, and CodeSearchNet, are significantly smaller than raw code datasets (CodeParrot GitHub, The Stack, The Pile, etc.).

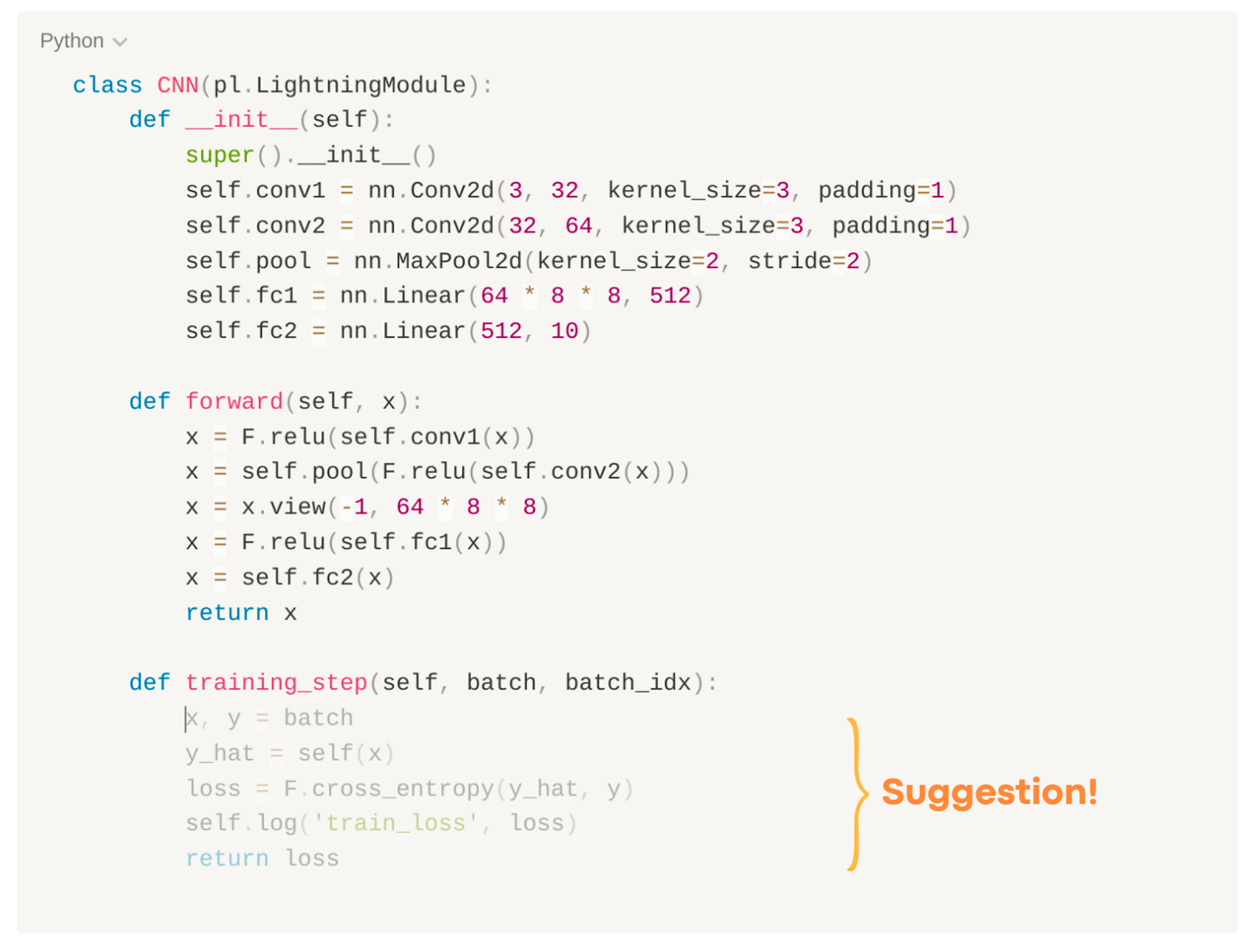

Based on our experiments, we believe this size disparity has become a bottleneck for training AI4Code LLMs that rely heavily on fine-tuning pre-trained models with parallel code-text datasets. While models trained on non-parallel data (raw source code files) are growing rapidly, those trained on code-text pairs (bimodal data) or on chunked unimodal data (code snippets extracted at a particular level of granularity) are constrained by data size.

Furthermore, existing datasets such as CoDesc and PyMT5 are limited to specific programming languages, underscoring the need for larger and more diverse datasets. Data quality also plays a significant role in AI model performance. Therefore, a large, high-quality dataset that spans multiple programming languages could greatly advance AI4Code LLMs.

What does The Vault provide?

CoDesc highlights the challenges faced by many tasks in the AI4Code domain: the lack of a standard noise-removal method and the lack of large, standard datasets suitable for training deep neural models. To address these obstacles, we present The Vault, an extensive parallel dataset of code and docstrings/comments. Our aim is to contribute to the community by providing a vast resource for training and evaluating natural language processing models related to code.

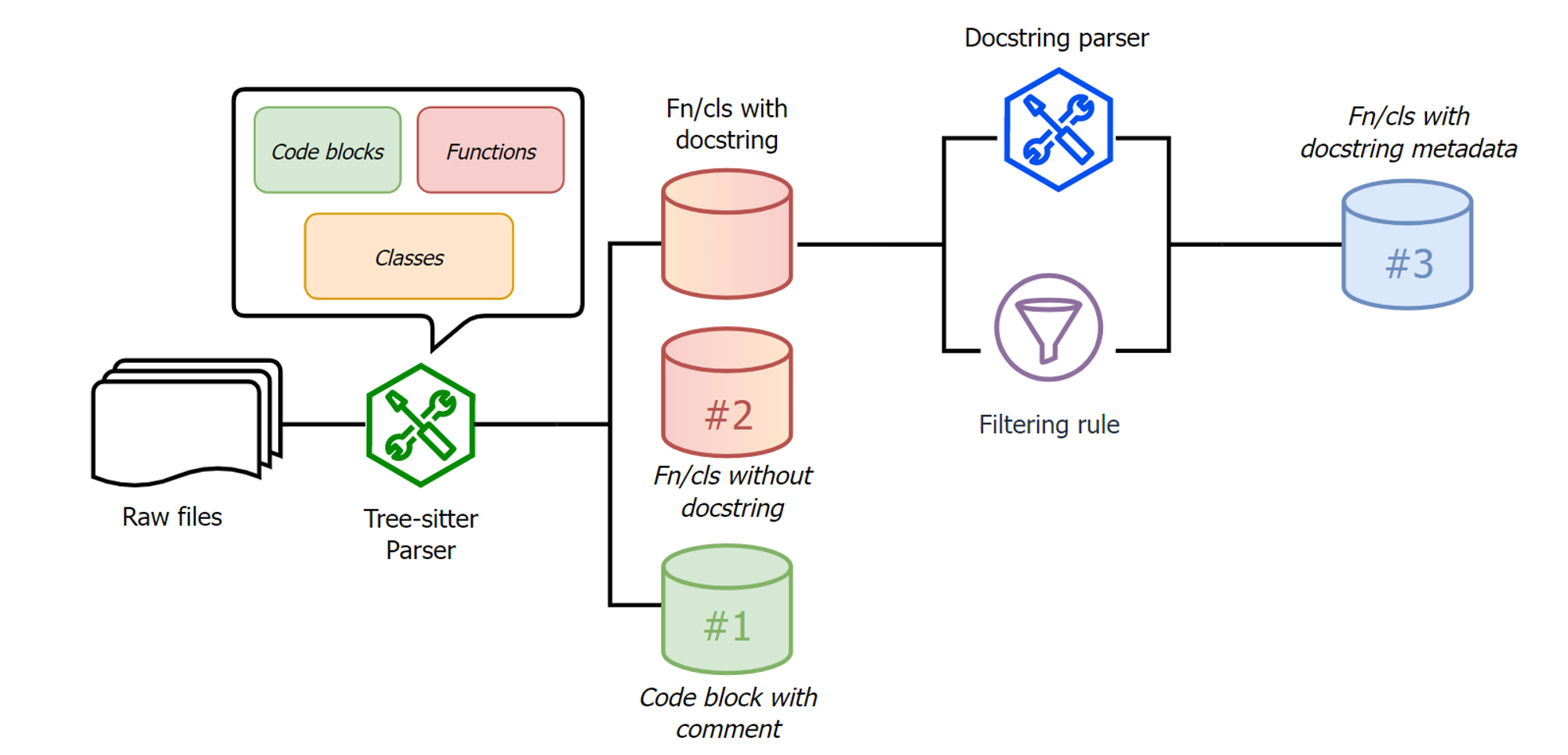

We also openly share our cleaning techniques and the pipeline that extracts data from raw code files. Both were carefully designed and analyzed to enhance data quality and optimize performance on downstream tasks. Our cleaning process combines 13 heuristic rules that eliminate uninformative data with a deep learning approach that refines the matching score between the parallel elements of The Vault.
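To make the rule-based part of the cleaning process more concrete, here is a minimal sketch of what heuristic docstring filters can look like. The three rules, their thresholds, and the names `MIN_WORDS` and `is_informative` are illustrative assumptions; they are not the 13 rules used in The Vault.

```python
import re

# Hypothetical rules for illustration only; not The Vault's actual heuristics.
MIN_WORDS = 3  # assumed threshold
URL_RE = re.compile(r"https?://\S+")
AUTOGEN_RE = re.compile(r"auto-?generated|do not edit", re.IGNORECASE)


def is_informative(docstring: str) -> bool:
    """Return True if the docstring passes all illustrative rules."""
    text = URL_RE.sub("", docstring).strip()  # ignore bare links
    if len(text.split()) < MIN_WORDS:         # too short to describe anything
        return False
    if AUTOGEN_RE.search(text):               # boilerplate from code generators
        return False
    letters = sum(ch.isalpha() for ch in text)
    if letters / len(text) < 0.5:             # mostly symbols or digits
        return False
    return True


pairs = [
    ("def add(a, b): return a + b", "Return the sum of two numbers."),
    ("def f(): pass", "TODO"),
    ("def g(): pass", "Auto-generated stub, do not edit."),
]
# Keep only the pairs whose docstring survives every rule.
kept = [(code, doc) for code, doc in pairs if is_informative(doc)]
```

Each rule is a cheap, independent check, so rules can be added, removed, or tuned per language without touching the rest of the pipeline.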

Our dataset and code are available to the community and can be easily accessed via GitHub and Hugging Face. The toolkit supports the community in various aspects of code extraction, parsing, filtering, and cleaning:

Key features:

- Code parser: a package that uses tree-sitter to provide an easy way to extract functions, classes, and comments from source code.

- Docstring parser: parses the docstring to obtain information such as the summary comment, parameters, return type, and docstring style.

- Filtering module: a module that integrates 13 rule-based methods to clean docstrings.
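As a rough illustration of what extracting code-docstring pairs involves, the sketch below uses Python's built-in `ast` module as a stand-in for tree-sitter, so that it runs without extra dependencies. It only handles Python source, and the `extract_pairs` function is a hypothetical helper, not part of The Vault toolkit's API.

```python
import ast

SOURCE = '''
def area(width, height):
    """Compute the area of a rectangle."""
    return width * height

def undocumented(x):
    return x
'''


def extract_pairs(source: str) -> list[dict]:
    """Collect function code and docstring pairs from Python source."""
    tree = ast.parse(source)
    pairs = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            doc = ast.get_docstring(node)
            if doc:  # keep only functions that have a docstring
                pairs.append({
                    "name": node.name,
                    "code": ast.get_source_segment(source, node),
                    "docstring": doc,
                })
    return pairs


pairs = extract_pairs(SOURCE)
print(pairs[0]["name"], "->", pairs[0]["docstring"])
```

A tree-sitter based parser follows the same idea (walk the syntax tree, pick function and class nodes, attach the nearest comment), but works across many languages with one interface.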

What tasks can be constructed using The Vault?

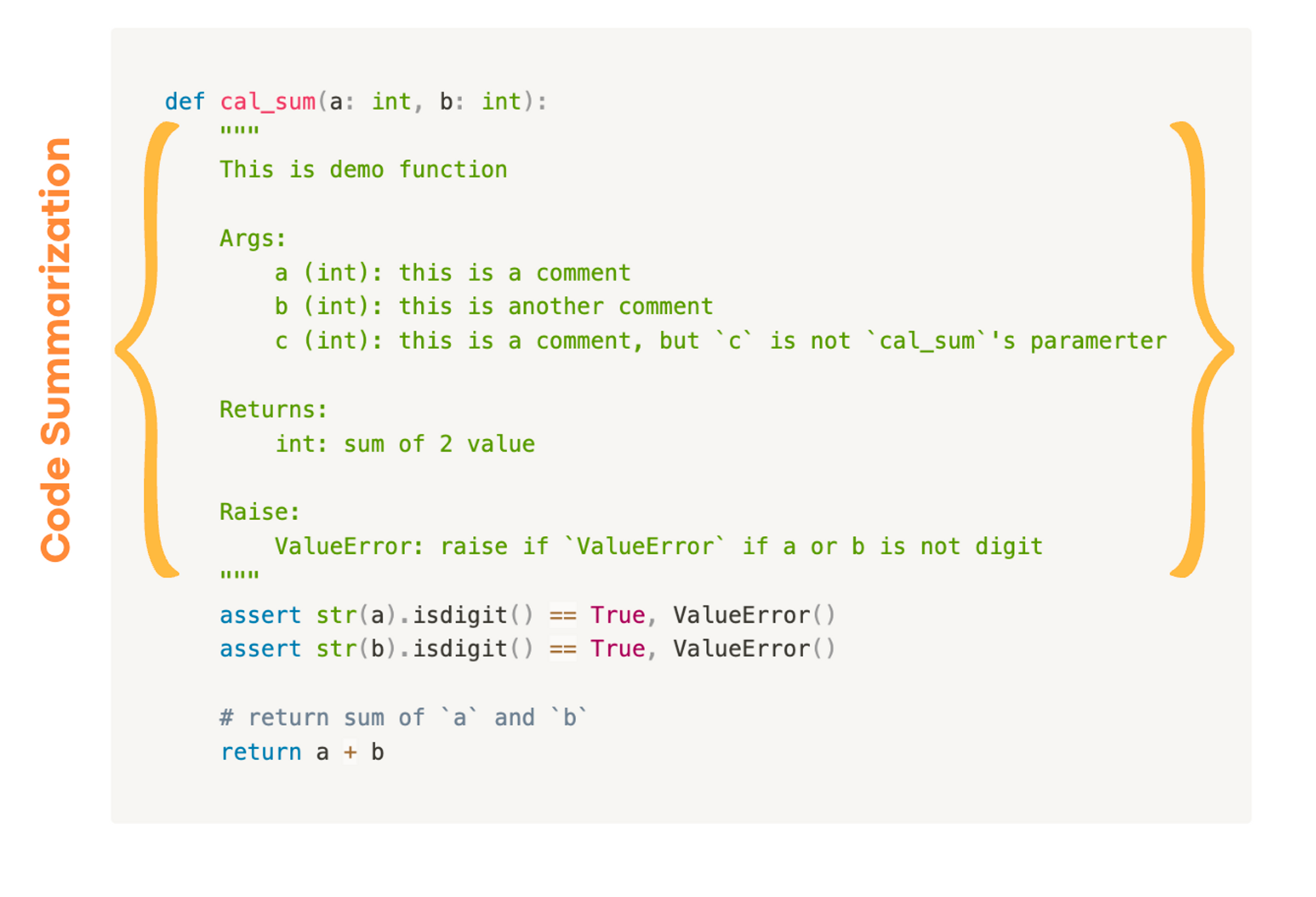

Code summarization

Code summarization aims to generate human-readable descriptions which are meaningful and accurately describe the purpose or function of given code snippets. The input is a piece of code, and the output is a concise summary explaining its functionality.

Traditionally, models are trained on parallel datasets containing code and corresponding comments or descriptions. However, these datasets can be noisy and limited, with descriptions that can be ambiguous, incomplete, or even misleading. In The Vault, we carefully design a cleaning method to capture all the informative sections of the docstring/description in order to boost model performance in the summarization task.

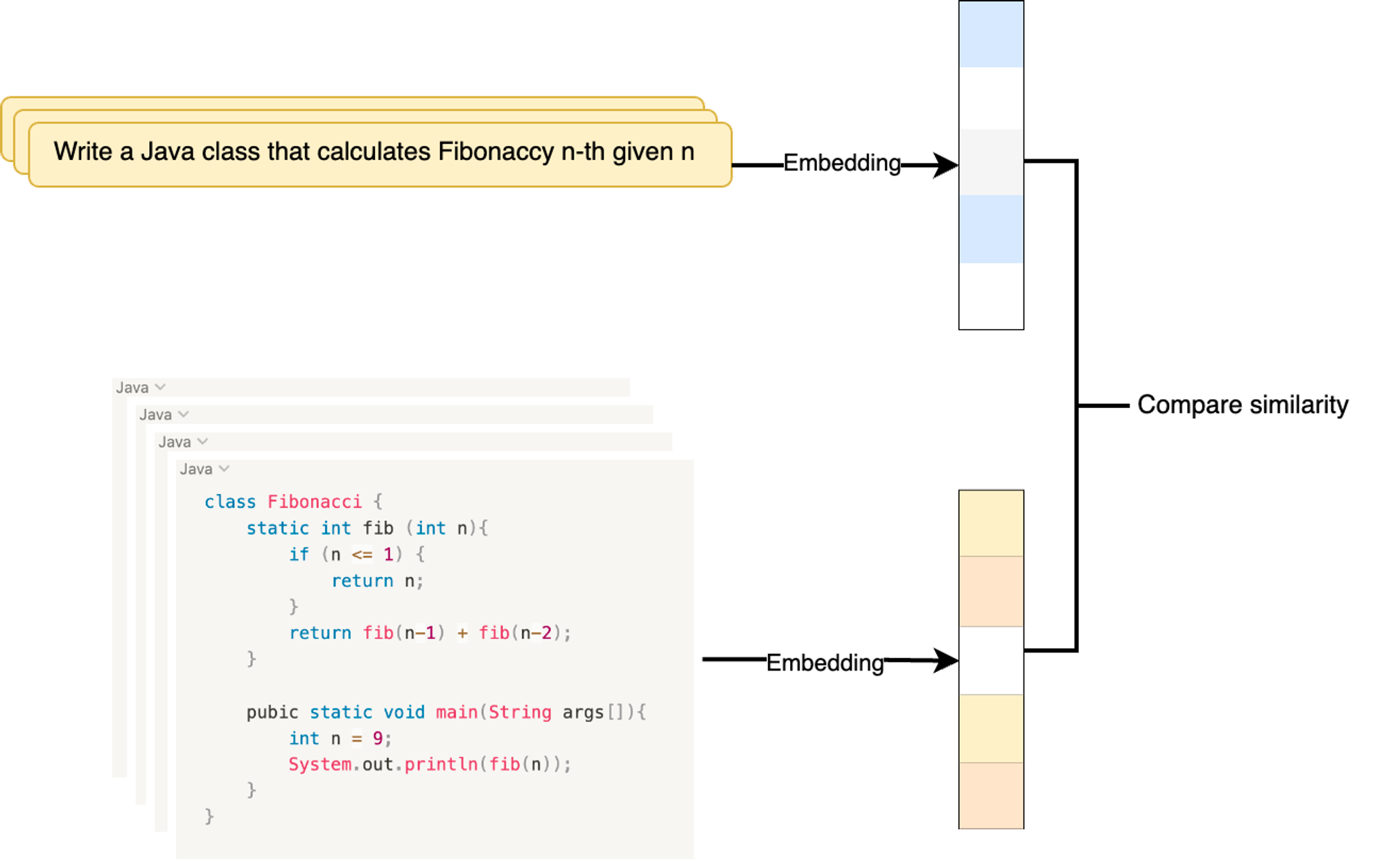

Code search

Code search is the task of retrieving relevant code snippets given a natural language query. The input is a query in natural language, and the output is a set of code snippets that satisfy the query. Models for this task are typically trained or fine-tuned on code and associated natural language descriptions from parallel datasets: both are embedded into the same vector space, and their similarity is compared.
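The embed-and-compare idea can be sketched in a few lines. The example below uses a toy bag-of-words embedding in place of a trained neural encoder, purely to show the retrieval mechanics; `embed`, `cosine`, `search`, and `CODE_SNIPPETS` are illustrative names, and a real system would learn the embedding from parallel code-text pairs.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (a stand-in for a neural encoder)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


CODE_SNIPPETS = [
    "def add(a, b): return a + b",
    "def read_file(path): return open(path).read()",
]


def search(query: str, top_k: int = 1) -> list[str]:
    """Rank snippets by similarity to the query and return the top_k."""
    q = embed(query)
    ranked = sorted(CODE_SNIPPETS, key=lambda code: cosine(q, embed(code)), reverse=True)
    return ranked[:top_k]


print(search("read file contents"))
```

The toy embedding only matches literal tokens, which is exactly the limitation a model trained on parallel data is meant to overcome: it can relate a query to code that shares no words with it.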

However, the effectiveness of a code search system depends heavily on the quality and diversity of its training data. A well-curated, large, and diverse parallel dataset can therefore ease this challenge and improve the model’s understanding of the relationship between code and natural language, resulting in better performance.

Code generation

Code generation, or text-to-code, involves creating code based on a natural language description. The input is a description of a desired functionality, and the output is the code that performs that function. Models for this task are usually trained or fine-tuned on parallel datasets that contain pairs of natural language descriptions and their corresponding code. However, the existing datasets are often limited in their coverage of programming languages and can contain noisy or low-quality examples. A large, diverse, and high-quality parallel dataset can provide a broader range of examples and contexts, thereby improving the model’s ability to generate syntactically and semantically correct code across various languages and tasks.

More advanced problems

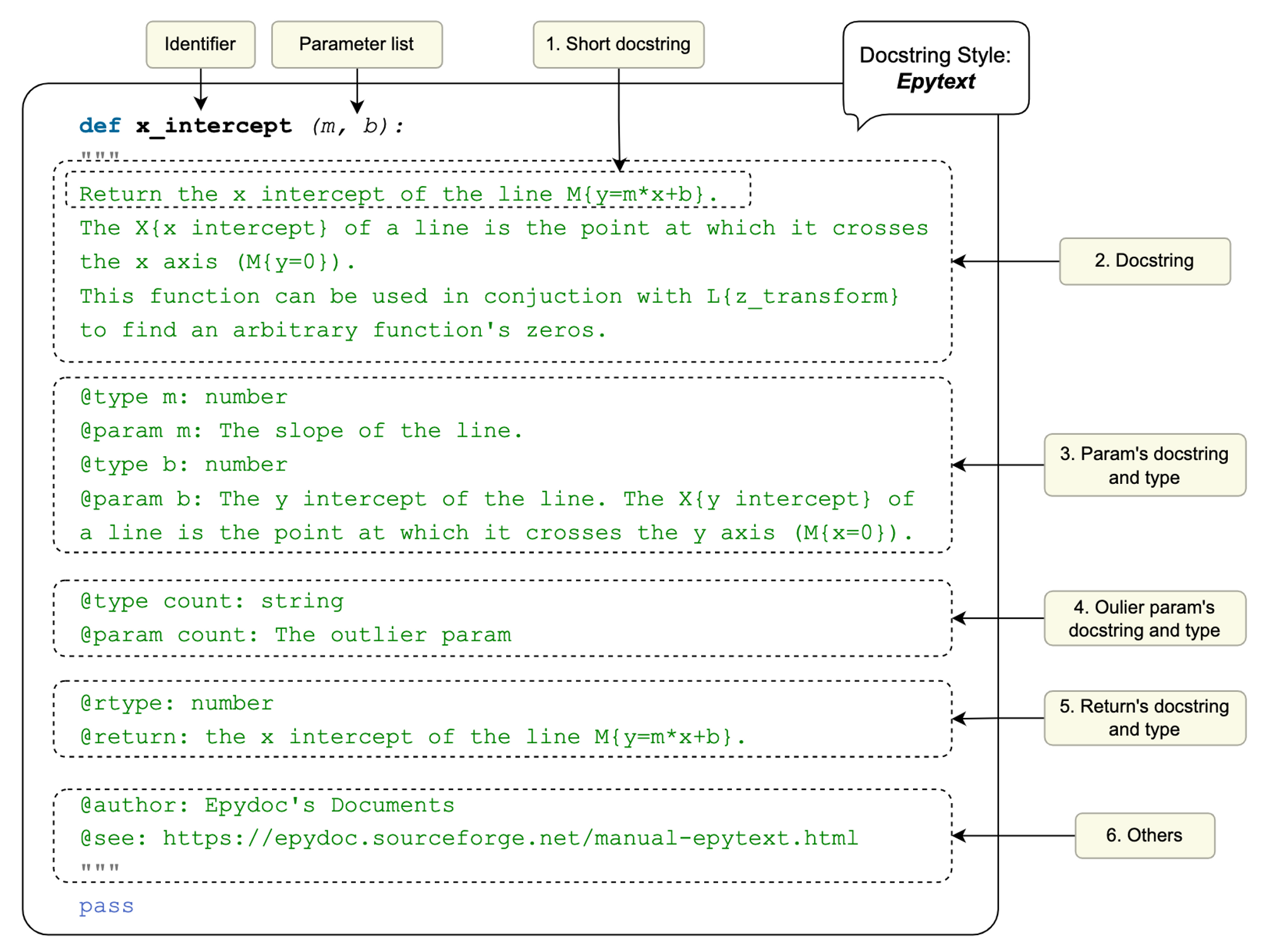

When building The Vault, our focus extends beyond extracting parallel elements from the source code. We make an effort to extract as much metadata as possible, including function/class information. The metadata in The Vault, such as parameter descriptions and types found in docstrings, can be used to generate more detailed and informative code summaries. By leveraging this information, models can provide insights into the purpose, expected inputs, and outputs of the suggested code, enhancing the summarization process.

To extract docstring metadata, we have developed a docstring style parser capable of extracting information from each style. By leveraging the specific conventions and patterns used in various programming languages, models can gain a deeper understanding of these languages. This approach facilitates knowledge transfer and code comprehension across different programming paradigms, bridging the gap between them.
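As an illustration of what docstring metadata extraction looks like for a single style, here is a minimal sketch that parses a Google-style docstring into a summary, parameters, and a return description. It handles only the `Args:` and `Returns:` sections with single-line entries; `parse_google_docstring` is a hypothetical helper and does not reflect the actual parser in The Vault toolkit.

```python
import re

DOCSTRING = """Compute the area of a rectangle.

Args:
    width (float): Width in meters.
    height (float): Height in meters.

Returns:
    float: The area in square meters.
"""

# Matches lines such as "    width (float): Width in meters."
PARAM_RE = re.compile(r"^\s+(\w+)\s*\(([^)]*)\):\s*(.+)$")


def parse_google_docstring(doc: str) -> dict:
    """Split a Google-style docstring into summary, params, and returns."""
    result = {"summary": "", "params": [], "returns": None}
    section = "summary"
    for line in doc.splitlines():
        stripped = line.strip()
        if stripped in ("Args:", "Returns:"):
            section = stripped[:-1].lower()  # switch to "args" or "returns"
            continue
        if not stripped:
            continue
        if section == "summary":
            result["summary"] = (result["summary"] + " " + stripped).strip()
        elif section == "args":
            match = PARAM_RE.match(line)
            if match:
                name, type_, desc = match.groups()
                result["params"].append(
                    {"name": name, "type": type_, "description": desc}
                )
        elif section == "returns":
            type_, _, desc = stripped.partition(":")
            result["returns"] = {"type": type_.strip(), "description": desc.strip()}
    return result


parsed = parse_google_docstring(DOCSTRING)
```

The resulting dictionary separates the short summary from the parameter and return descriptions, which is the kind of structure that makes parameter-level summarization tasks possible.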

Data characteristics inside The Vault

While building this dataset, we analyzed its contents across programming languages and uncovered some notable patterns.

Comparison with Existing Benchmarks

The Vault stands out from existing datasets due to its extensive coverage of programming languages and its substantial size. The comparison is illustrated in the figure below.

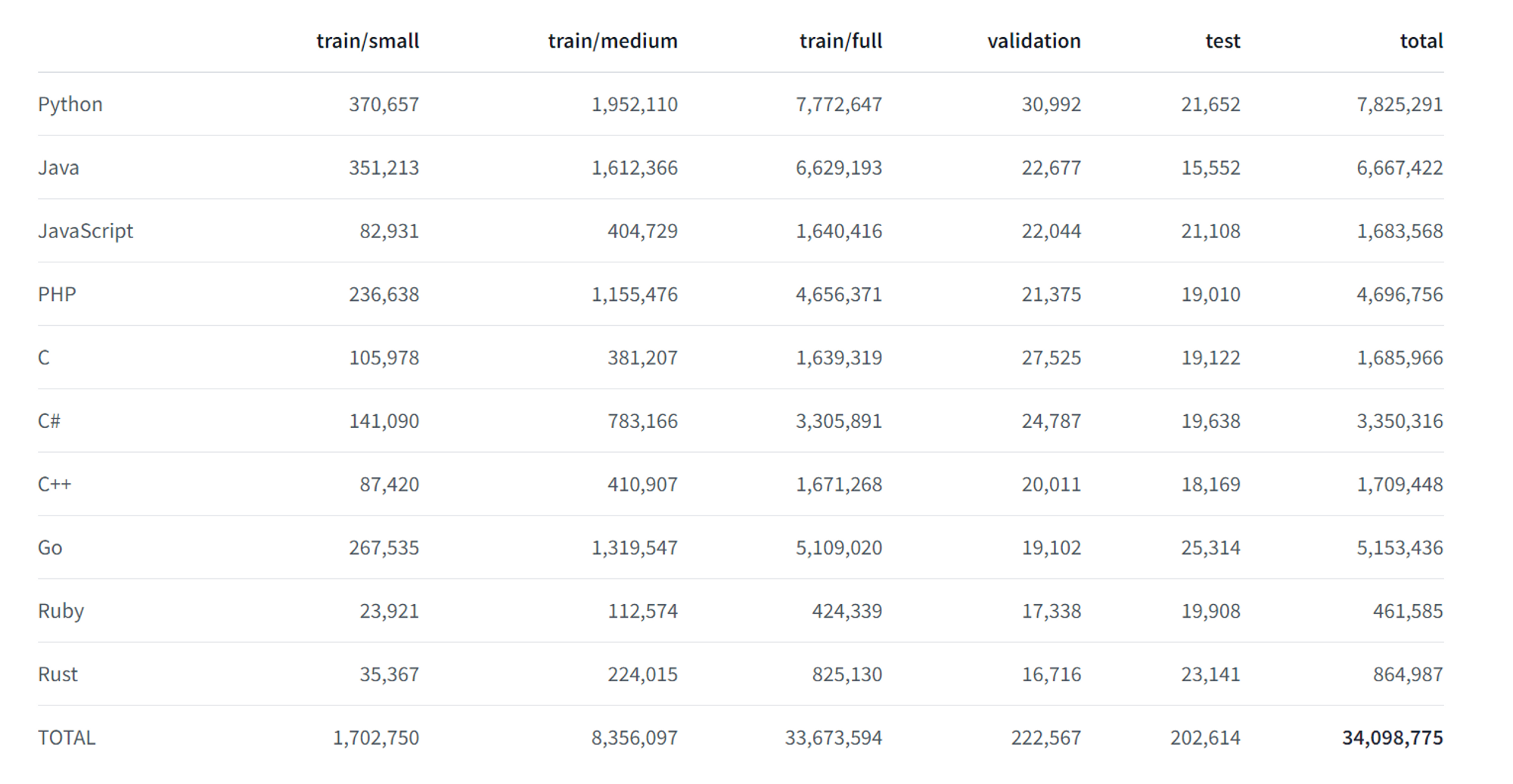

After deduplication, we obtain over 34M pairs, which still significantly exceeds the scale of existing datasets. We split the data into three training sets (small, medium, and full), a validation set, and a test set.
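The post does not describe how deduplication or splitting is implemented, so the following is only a sketch under stated assumptions: exact-match deduplication by hashing whitespace-normalized code, followed by a seeded random split. The helper names and the 10% validation and test fractions are illustrative, not The Vault's actual settings.

```python
import hashlib
import random


def normalize(code: str) -> str:
    """Collapse whitespace so trivially reformatted copies hash the same."""
    return " ".join(code.split())


def deduplicate(samples: list[dict]) -> list[dict]:
    """Keep the first sample for each distinct normalized code string."""
    seen, unique = set(), []
    for sample in samples:
        digest = hashlib.sha256(normalize(sample["code"]).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(sample)
    return unique


def split(samples: list[dict], valid_frac: float = 0.1, test_frac: float = 0.1, seed: int = 0) -> dict:
    """Shuffle with a fixed seed, then cut into valid/test/train (assumed fractions)."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_valid = int(len(shuffled) * valid_frac)
    n_test = int(len(shuffled) * test_frac)
    return {
        "valid": shuffled[:n_valid],
        "test": shuffled[n_valid:n_valid + n_test],
        "train": shuffled[n_valid + n_test:],
    }


samples = [
    {"code": f"def f{i}():\n    return {i}", "docstring": f"Return {i}."}
    for i in range(20)
]
# A reformatted copy of f0 that should be removed as a duplicate.
samples.append({"code": "def f0():  return 0", "docstring": "Return 0."})

unique = deduplicate(samples)
splits = split(unique)
```

Deduplicating before splitting matters: otherwise near-identical functions can land in both the training and test sets and inflate evaluation scores.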

Data characteristics distributions

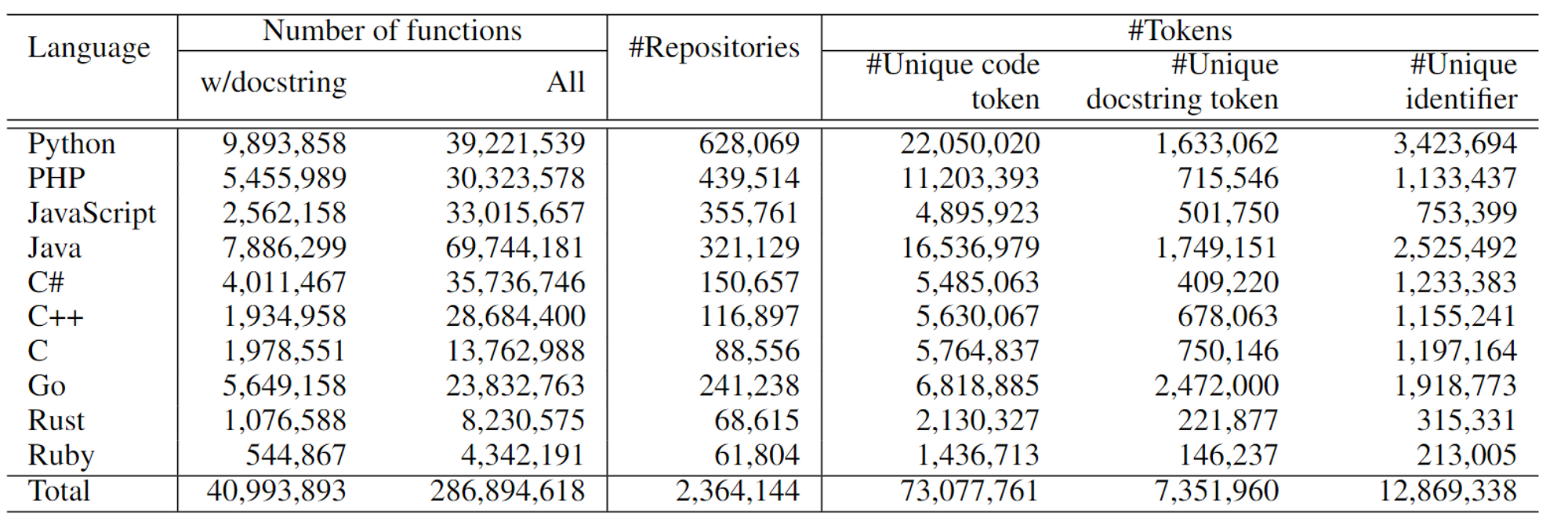

The table above summarizes the dataset. Python and Java are the dominant languages, reflecting their popularity and widespread use, and the large token count reflects the substantial amount of code and associated text in the dataset.

At the sample level, code averages around 100 tokens, while docstrings are much shorter, averaging only 15 tokens.

Docstring styles

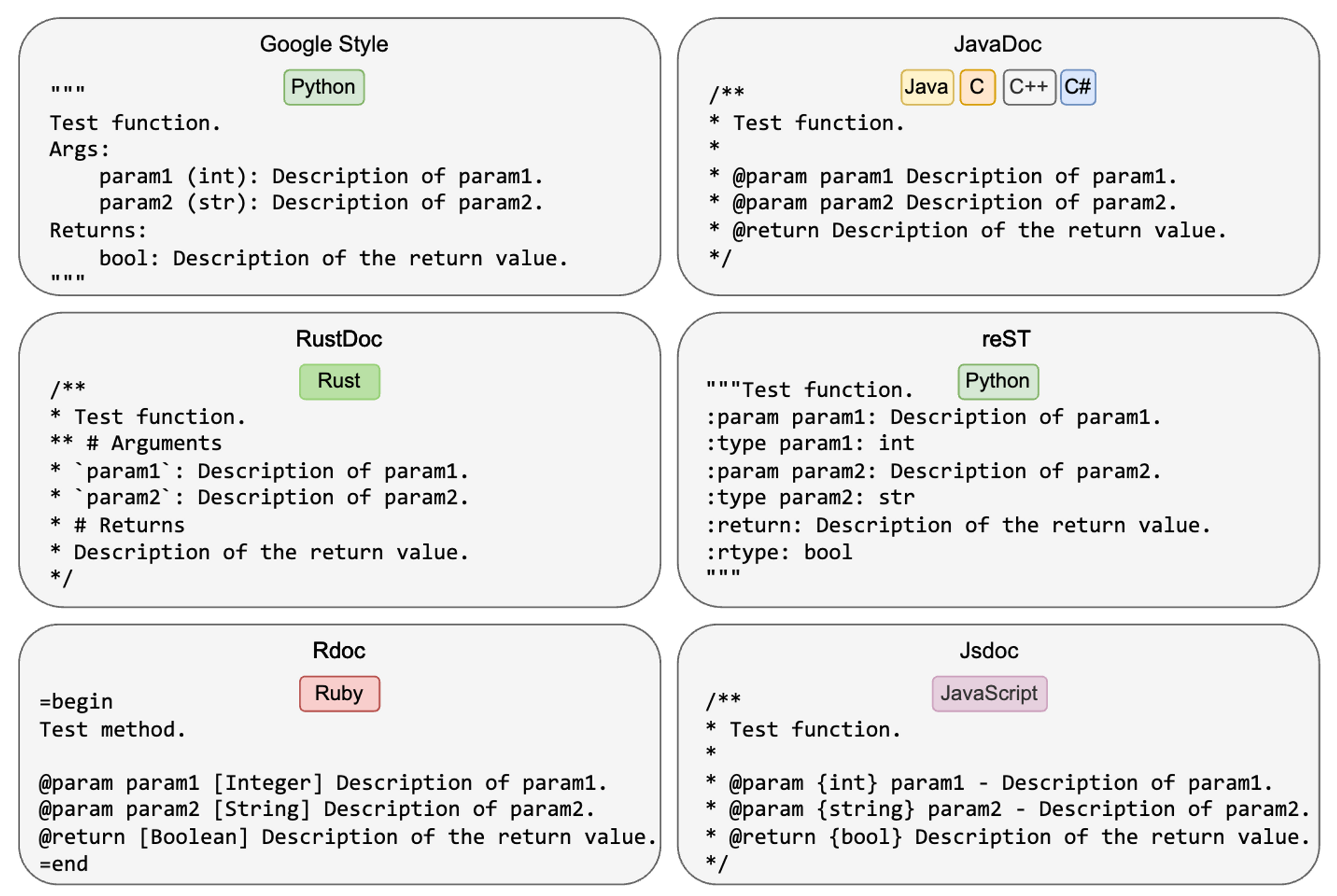

Alongside typical docstrings that provide brief descriptions of the source code, many adhere to formatting and style conventions such as Google, JSDoc, and reST, among others.

Our carefully designed toolkit parses these docstrings and extracts valuable metadata, supporting 11 prevalent docstring styles.

Through extensive statistical analysis, we have gained insights into the prevalence of styled docstrings within the larger code-text dataset. It is fascinating to note that these styled docstrings constitute only a fraction of the entire corpus. However, this seemingly small fraction opens up a world of possibilities for advanced research. Imagine the potential of controlling docstring style during generation or crafting nuanced explanations for function parameters. This rich dataset provides a fertile ground for exploring and tackling these complex challenges.

Unleash Your Curiosity and Explore Further

We encourage you to explore the concepts and ideas presented in this blog post in more detail. You can find additional information through the resources provided below.

Paper: More details about The Vault can be found in our research paper.

GitHub: Check out our source code and toolkit HERE.

Hugging Face: Check out The Vault on the Hugging Face Hub.

Feedback or Questions: Contact us at support.ailab@fpt.com.

Acknowledgments

We are grateful to have the support of the FPT Software AI Center in funding this project. For more details about our organization and its initiatives, please visit https://www.fpt-aicenter.com/about/.